Simple rule: If you can’t judge distances you shouldn’t drive. The problem: judging distances is anything but simple.

We humans, of course, have two high-resolution, highly synchronized visual sensors — our eyes — that let us to gauge distances using stereo-vision processing in our brain.

A comparable, dual-camera stereo vision system in a self-driving car, however, would be very sensitive. If the cameras are even slightly out of sync, it leads to what’s known as “timing misalignment,” creating inaccurate distance estimates.

That’s why we perform distance-to-object detection using data from a single camera. Using just one camera, however, presents its own set of challenges.

Before the advent of deep neural networks, a common way to compute distance to objects from single-camera images was to assume the ground is flat. Under this assumption, the three-dimensional world was modeled using two-dimensional information from a camera image. Optics geometry would be used to estimate the distance of an object from the reference vehicle.

That doesn’t always work in the real world, though. Going up or down a hill can cause an inaccurate result because the ground just isn’t flat.

Such faulty estimates can have negative consequences. Automated cruise control, lane change safety checks and lane change execution all rely on judging distances correctly.

A distance overestimate — determining that the object is further away than it is — could result in failure to engage automatic cruise control. Or even more critically, a failure to engage automatic emergency braking features.

Incorrectly determining an obstacle is closer than it is could result in other failures, too, such as engaging cruise control or emergency braking when they’re not needed.

Going the Distance with Deep Learning

To get it right, we use convolutional neural networks and data from a single front camera. The DNN is trained to predict the distance to objects by using radar and lidar sensor data as ground-truth information. Engineers know this information is accurate because direct reflections of transmitted radar and lidar signals pro precise distance-to-object information, regardless of a road’s topology.

By training the neural networks on radar and lidar data instead of relying on the flat ground assumption, we enable the DNN to estimate distance to objects from a single camera, even when the vehicle is going up or down hill.

In our training implementation, the creation and encoding pipeline for ground truth data is automated. So the DNN can be trained with as much data as can be collected by sensors — without manual labeling resources becoming a bottleneck.

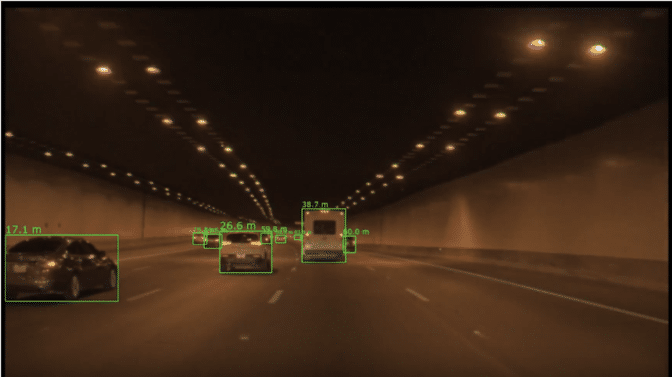

We use DNN-based distance-to-object estimation in combination with object detection and camera-based tracking for both longitudinal, or speeding up and slowing down, and lateral control, or steering. To learn more about distance-to-object computation using DNNs, visit our DRIVE Networks page.

The post To Go the Distance, We Built Systems That Could Better Perceive It appeared first on The Official NVIDIA Blog.